Introduction

One of the questions you might ask when exploring a new technology is how well it performs. In this article, we’ll dive into that and (hopefully) answer all your performance-related questions.

For these tests, we’re using our enterprise demo app, Bookstore, which you can find in this repository. The goal is to measure how many system resources a typical Jmix application consumes while handling interactions from a large number of active users (large by the standards of Jmix and other stateful applications).

Environment

Bookstore Adjustments

Before we dive into the results, let’s go over the changes we made to the application to set up performance testing.

1. Add Actuator and Prometheus Micrometer Registry

We added these libraries to expose the Prometheus Actuator endpoint at /actuator/prometheus:

```groovy
dependencies {
    // Monitoring
    implementation 'io.micrometer:micrometer-registry-prometheus'
    implementation 'org.springframework.boot:spring-boot-starter-actuator'
}
```

You can check out the rest of the endpoint setup and other minor adjustments in this commit.
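To check that the endpoint works, you can request /actuator/prometheus and look for a metric by name. The sketch below does this with the JDK's built-in HTTP client (Java 11+). It assumes the app runs on localhost:8080 and that the endpoint is exposed (in Spring Boot that normally means listing prometheus in management.endpoints.web.exposure.include) and reachable without authentication; the class name is hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical helper: fetches the Actuator Prometheus endpoint and prints one metric. */
final class ScrapeCheck {

    /** Keeps the sample lines of one metric from Prometheus text output, skipping # HELP / # TYPE lines. */
    static List<String> samplesOf(String exposition, String metricName) {
        return exposition.lines()
                .filter(line -> !line.startsWith("#"))
                // Match the exact name followed by labels or a space, not longer names sharing the prefix.
                .filter(line -> line.startsWith(metricName + "{") || line.startsWith(metricName + " "))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        // Assumption: app on localhost:8080, endpoint exposed and not behind authentication.
        String url = args.length > 0 ? args[0] : "http://localhost:8080/actuator/prometheus";
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            System.err.println("Unexpected HTTP status: " + response.statusCode());
            return;
        }
        samplesOf(response.body(), "jvm_memory_used_bytes").forEach(System.out::println);
    }
}
```

If the setup is correct, this prints one jvm_memory_used_bytes line per JVM memory pool.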

2. Disable Vaadin Checks

To make the performance tests smoother, we disabled certain Vaadin security checks that interfere with replaying recorded HTTP requests. Specifically, we turned off XSRF protection and sync ID verification in the application-perf-tests.properties file:

```properties
# JMeter tests support
vaadin.disable-xsrf-protection=true
vaadin.syncIdCheck=false
```

3. Disable Task Notification Timer

We disabled the Task Notification Timer to simplify the process of recording and emulating HTTP requests in JMeter. This allows us to focus on testing the core application functionality without additional background tasks affecting the results.

Tooling

For our performance tests, we used the following additional software:

- PostgreSQL database.

- Node Exporter to expose server metrics such as CPU load.

- JMeter with Prometheus Listener to generate test load and provide client-side metrics.

- Prometheus to collect and store results.

- Grafana for visualization.

Now that we’ve covered the software setup, let’s move on to the hardware.

Server Configuration

Both the application and database servers are virtual machines, each with the following setup:

Characteristics:

- Virtualization: Proxmox VE, qemu-kvm

- CPU: 8 cores, x86-64-v3

- RAM: 16 GB

- HDD: 200 GB

- OS: Ubuntu 22.04

Host Hardware:

- CPU: Intel Xeon E5-2686 v4, 2.3-3.0 GHz

- RAM: DDR4 ECC 2400 MHz

- Disk: ZFS RAID 1 on two enterprise SATA SSDs (Samsung MZ7L33T8HBLT-00A07)

Although modest, this virtualized setup can handle typical enterprise applications efficiently. Higher loads or more complex systems may need further optimization, but this configuration works well for most internal apps.

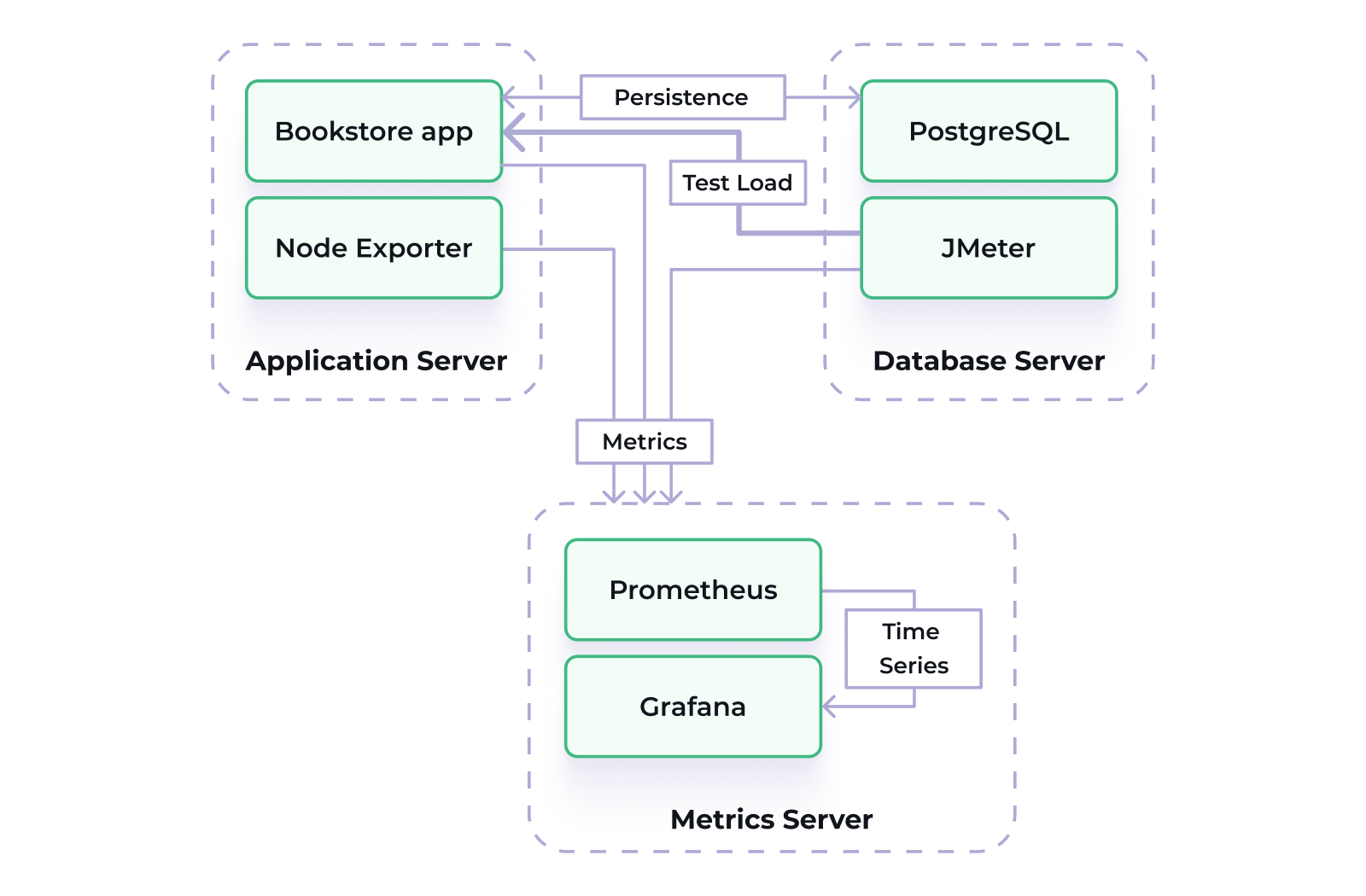

Infrastructure

We’re using three servers in total: two for running tests and one dedicated to collecting metrics:

- Application Server runs the Bookstore application as a Spring Boot jar, along with Node Exporter.

- Database Server hosts the PostgreSQL database and JMeter. It stores persistent data and generates the test load.

- Metrics Server runs Prometheus and Grafana, which collect and process the metrics.

By placing the application, the database (together with JMeter), and metrics collection on separate servers, we keep load generation and monitoring from competing with the application for resources, which helps keep the measurements accurate. Let’s move on to the test itself.

Test

We used the JMeter HTTP(S) Test Script Recorder to generate the required load without spending many resources on web clients. The recorder captures a sequence of HTTP requests, which can then be replayed by a large number of threads simultaneously at low cost. However, replayed requests cannot react to changes in real time the way a full-fledged Vaadin client does, so we used a simple test scenario to avoid errors and invalid requests.

The test plan was created following these instructions, with several additions and modifications.

The test plan for each user (thread) represents an infinite loop of the following actions:

- Load login page

- Wait an average of loginWait milliseconds

- Fill in the credentials (hikari/hikari) and log in

- Open the Order List View

- Click the "Create" button to open the Order Details View with a new Order entity

- Select a customer (one of the first 10 customers in the list, depending on the thread number)

- Create 3 order lines, repeating the following actions for each:

  a. Click the "Create" button in the Order Lines block

  b. Select a product

  c. Click the "OK" button

- Confirm order creation by clicking the "OK" button

- Wait an average of logoutWaitAvg milliseconds

- Log out

To emulate user actions realistically, each click is preceded by a pause of clickPeriodAvg milliseconds on average (varying by up to clickPeriodDev in either direction) after the previous request. Because of the limitations of the recorded-request approach, every loop iteration has to log in and log out. Logging in is a heavy operation, so it is balanced with idle time (loginWait, logoutWaitAvg) to make the load closer to that of real users.
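The click pauses can be pictured as a uniform random delay. This is a minimal sketch, assuming that clickPeriodDev means each pause is drawn uniformly from [clickPeriodAvg - clickPeriodDev, clickPeriodAvg + clickPeriodDev]; the class and method names are hypothetical, and the actual test plan may implement the pause with a different JMeter timer.

```java
import java.util.Random;

/** Hypothetical model of the randomized "think time" between clicks. */
final class ThinkTime {
    private ThinkTime() {}

    /** Returns a delay in milliseconds drawn uniformly from [avg - dev, avg + dev] (inclusive). */
    static long uniformDelayMs(long avgMs, long devMs, Random rnd) {
        if (devMs < 0 || devMs > avgMs) {
            throw new IllegalArgumentException("deviation must be in [0, avg]");
        }
        // Same shape as a constant offset plus a random range:
        // offset = avg - dev, range = 2 * dev.
        long offset = avgMs - devMs;
        long range = 2 * devMs;
        return offset + (long) (rnd.nextDouble() * (range + 1));
    }

    public static void main(String[] args) {
        Random rnd = new Random(42); // fixed seed for a reproducible demo
        int n = 100_000;
        long min = Long.MAX_VALUE, max = Long.MIN_VALUE, sum = 0;
        for (int i = 0; i < n; i++) {
            long d = uniformDelayMs(2000, 1000, rnd); // clickPeriodAvg, clickPeriodDev defaults
            min = Math.min(min, d);
            max = Math.max(max, d);
            sum += d;
        }
        System.out.printf("min=%d ms, max=%d ms, mean=%.1f ms%n", min, max, (double) sum / n);
    }
}
```

With the default values, click pauses fall between 1 and 3 seconds; applying the same function to logoutWaitAvg and logoutWaitDev gives a wait of 20 to 40 seconds before logout.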

Test plan parameters can be set with -J<parameterName>=<value> command-line arguments when running the test, e.g. -JthreadCount=500. The following parameters are available:

| Parameter Name | Default Value | Description |
|---|---|---|
| host | localhost | Bookstore app host |
| port | 8080 | Bookstore app port |
| scheme | http | Bookstore app scheme |
| threadCount | 1000 | Number of users performing the test steps simultaneously |
| rampUpPeriod | 60 | Time to ramp up to the full number of users, s |
| clickPeriodAvg | 2000 | Average period between clicks, ms |
| clickPeriodDev | 1000 | Maximum deviation of the click period from the average, in either direction, ms |
| loginWait | 10000 | Time to wait before login, ms |
| logoutWaitAvg | 30000 | Average period to wait before logout, ms |
| logoutWaitDev | 10000 | Maximum deviation of the wait before logout from the average, in either direction, ms |
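JMeter passes each -J<name>=<value> argument to the test plan as a property, which a plan typically reads with the ${__P(name,default)} function. The hypothetical helper below mimics that lookup in plain Java to show how command-line values override the defaults from the table. Unlike JMeter, which accepts any property name, it rejects unknown names, so a misspelling such as threadCound fails loudly instead of silently leaving the default of 1000 in place.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical helper mirroring how -J<name>=<value> arguments override the test plan defaults. */
final class PerfTestParams {

    /** Defaults copied from the parameter table. */
    static final Map<String, String> DEFAULTS = Map.of(
            "host", "localhost",
            "port", "8080",
            "scheme", "http",
            "threadCount", "1000",
            "rampUpPeriod", "60",
            "clickPeriodAvg", "2000",
            "clickPeriodDev", "1000",
            "loginWait", "10000",
            "logoutWaitAvg", "30000",
            "logoutWaitDev", "10000");

    /** Applies -Jname=value arguments on top of the defaults; other arguments are ignored. */
    static Map<String, String> resolve(String... args) {
        Map<String, String> props = new HashMap<>(DEFAULTS);
        for (String arg : args) {
            int eq = arg.indexOf('=');
            if (!arg.startsWith("-J") || eq < 0) {
                continue; // e.g. -n, -t, plan file
            }
            String name = arg.substring(2, eq);
            if (!DEFAULTS.containsKey(name)) {
                // Unlike JMeter, fail on unknown names so typos are caught.
                throw new IllegalArgumentException("Unknown parameter: " + name);
            }
            props.put(name, arg.substring(eq + 1));
        }
        return props;
    }

    public static void main(String[] args) {
        Map<String, String> props = resolve("-n", "-JthreadCount=1000", "-Jhost=10.0.0.5", "-JrampUpPeriod=230");
        new TreeMap<>(props).forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```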

Now that we can emulate the interactions we want to track, let’s look at the metrics that matter for them.

Metrics

To assess the performance, we tracked several key metrics throughout the test to provide a view of how the system behaves under load:

- View loading time is measured as the HTTP response time of all requests involved in loading and initializing the view. These metrics are provided by the JMeter Prometheus Listener (see the test plan). The following requests correspond to each action:

  - Open List view: LoadOrdersListView-34, OrderCreation-37, OrderCreation-38

  - Open Detail view: CLICK-create-new-order-39, OrderCreation-40, OrderCreation-41

  - Save entity and return to the list view: CLICK-save-order-70, OrderCreation-71, OrderCreation-72

- Average entity saving time is measured by a Micrometer registry timer in OrderDetailView.java#saveDelegate.

- CPU usage is provided by Node Exporter on the application server.

- Average heap usage is provided by the Micrometer registry.

- Allocated heap memory is set with the -Xmx option at application startup.
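A timer such as the one around saveDelegate records how long each save takes and exposes two cumulative values: a count and a total duration. In Prometheus these appear with _count and _sum suffixes (presumably jmix_bookstore_order_save_time_seconds_count and jmix_bookstore_order_save_time_seconds_sum here), and their ratio gives the average. The stdlib-only class below is a hypothetical stand-in that illustrates this principle; it is not Micrometer's API, and the real OrderDetailView code is not reproduced here.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.Supplier;

/** Hypothetical timer keeping the two cumulative values a Prometheus timer exposes. */
final class SaveTimer {
    private final AtomicLong count = new AtomicLong();
    private final AtomicLong totalNanos = new AtomicLong();

    /** Runs the action, records its wall-clock duration, and returns its result. */
    <T> T record(Supplier<T> action) {
        long start = System.nanoTime();
        try {
            return action.get();
        } finally {
            // Record even if the action throws.
            totalNanos.addAndGet(System.nanoTime() - start);
            count.incrementAndGet();
        }
    }

    /** Corresponds to the *_count series. */
    long count() { return count.get(); }

    /** Corresponds to the *_sum series, in seconds. */
    double sumSeconds() { return totalNanos.get() / (double) TimeUnit.SECONDS.toNanos(1); }

    /** Average duration since start-up in seconds (count and sum are read separately, not as a snapshot). */
    double meanSeconds() {
        long n = count.get();
        return n == 0 ? 0.0 : sumSeconds() / n;
    }

    public static void main(String[] args) {
        SaveTimer timer = new SaveTimer();
        for (int i = 0; i < 3; i++) {
            // Hypothetical stand-in for a save that takes about 15 ms.
            timer.record(() -> { sleep(15); return "saved"; });
        }
        System.out.printf("count=%d, mean=%.3f s%n", timer.count(), timer.meanSeconds());
    }

    private static void sleep(long ms) {
        try {
            Thread.sleep(ms);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```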

These metrics were selected to give us a well-rounded picture of the application’s performance and resource efficiency.

Running and Measurement

Environment Setup

First, we installed Node Exporter on the application server from a tarball to enable real-time performance monitoring. On the database server, we deployed PostgreSQL 16.3 with Docker, using the official image.

Next, we installed JMeter 5.6.3, also on the database server, to generate the load. On the metrics server, we deployed Prometheus with Docker using a custom configuration, and then added Grafana 11.1.4, following the official documentation, for visualization.

Building and Running the Application

With our environment in place, it was time to launch the application! We built it with the command ./gradlew build -Pvaadin.productionMode=true bootJar, ensuring it was optimized for production. Then we copied the output jar to the application server and launched it using the command:

```shell
java -Dspring.profiles.active=perf-tests -Xmx<N> -jar jmix-bookstore.jar
```

where <N> can be set to 2g, 5g, 10g, or 14g, depending on how much memory we wanted to allocate.
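To confirm that the -Xmx value took effect, a JVM can report its heap limit and current heap usage through standard Java APIs. This sketch uses Runtime.maxMemory() and the platform MemoryMXBean; the class name is hypothetical. Note that Micrometer's jvm_memory_used_bytes is reported per memory pool, so its heap series have to be summed to compare with the aggregate figure printed here.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

/** Hypothetical helper printing the heap limit (-Xmx) and current heap usage of this JVM. */
final class HeapReport {

    static double toGiB(long bytes) {
        return bytes / (1024.0 * 1024 * 1024);
    }

    public static void main(String[] args) {
        // Roughly matches -Xmx (some collectors report slightly less); Long.MAX_VALUE if unlimited.
        long max = Runtime.getRuntime().maxMemory();
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        System.out.printf("max heap:  %.2f GiB%n", toGiB(max));
        System.out.printf("used heap: %.2f GiB (%.0f%% of max)%n",
                toGiB(heap.getUsed()), 100.0 * heap.getUsed() / max);
    }
}
```

Run standalone with, for example, java -Xmx2g HeapReport.java, it should print a limit close to 2 GiB.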

Test Running

The test plan is started on the database server using the command below:

```shell
./jmeter -n -t path/to/OrderCreation.jmx -l logs/log.txt -JthreadCount=1000 -Jhost=<app_server_ip> -JrampUpPeriod=230
```

This command runs the JMeter test plan on the database server. It simulates 1,000 users interacting with the application, gradually increasing the load over 230 seconds, and logs the test results in log.txt.
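JMeter starts the threads of a thread group spread evenly across the ramp-up period. With threadCount=1000 and rampUpPeriod=230, a new virtual user therefore starts roughly every 230 ms (about 4.3 users per second) until all 1,000 are running. The sketch below just does that arithmetic; the class name is hypothetical, and JMeter's own scheduling may round the delays slightly differently.

```java
/** Hypothetical helper computing start offsets for an evenly spread ramp-up. */
final class RampUp {

    /** Offset in milliseconds at which thread {@code index} (0-based) starts. */
    static long startOffsetMs(int index, int threadCount, int rampUpSeconds) {
        return Math.round(index * (rampUpSeconds * 1000.0) / threadCount);
    }

    public static void main(String[] args) {
        int threads = 1000;
        int rampUp = 230;
        System.out.println("interval between starts: " + startOffsetMs(1, threads, rampUp) + " ms");
        System.out.println("last thread starts at: " + startOffsetMs(threads - 1, threads, rampUp) + " ms");
    }
}
```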

Measurements

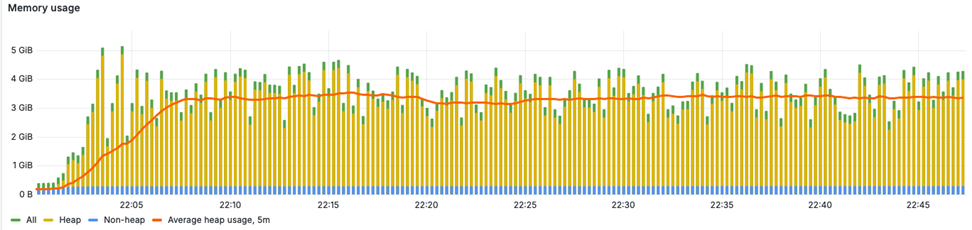

Let's look at the visualized results of the test with a 5 GB heap and 1000 users following the test plan described above, with default parameters and rampUpPeriod=230 seconds.

Memory

Memory usage can be visualized with the jvm_memory_used_bytes metric:

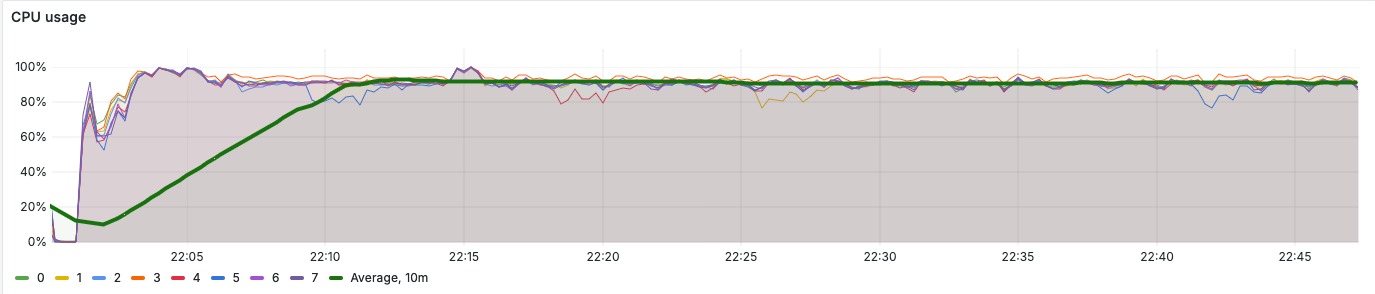

CPU Usage

CPU usage is measured by node_cpu_seconds metric by summing all modes except "idle":

As we can see, all 8 cores of the processor are almost fully used (90%). The same distribution is observed for all other tests for 1000 users with default test plan parameters and different allocated memory volumes.

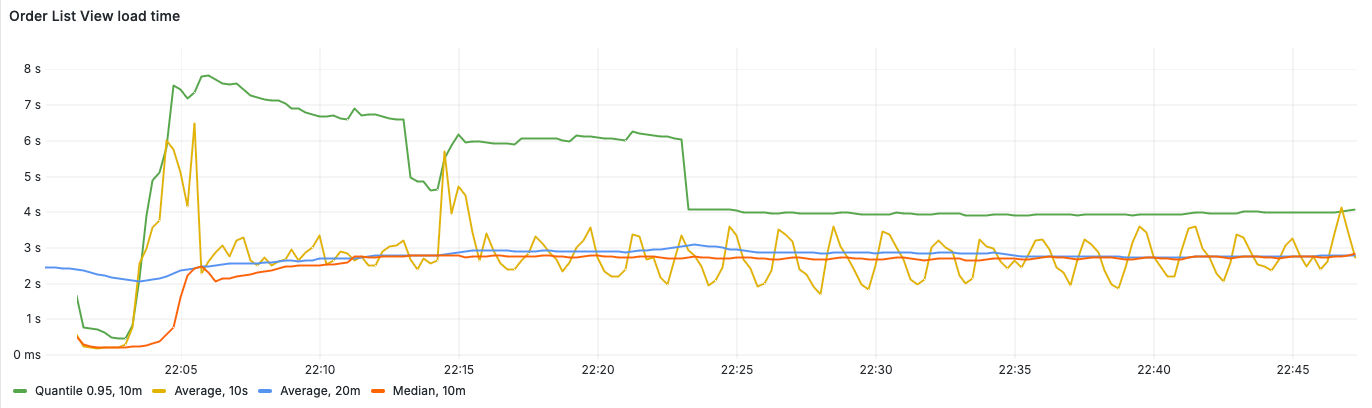
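The CPU figure is the share of CPU time spent in any mode other than idle. Given two readings of the per-mode counters from node_cpu_seconds_total (summed over all cores here for simplicity), the busy fraction is the non-idle increase divided by the total increase. A minimal sketch; the class name is hypothetical and the counter values in main are illustrative, not measurements:

```java
import java.util.Map;

/** Hypothetical helper: busy CPU fraction from two readings of per-mode CPU time counters. */
final class CpuUsage {

    /** Fraction of CPU time spent in any mode other than "idle" between two readings. */
    static double busyFraction(Map<String, Double> before, Map<String, Double> after) {
        double busy = 0;
        double total = 0;
        for (Map.Entry<String, Double> e : after.entrySet()) {
            double delta = e.getValue() - before.getOrDefault(e.getKey(), 0.0);
            total += delta;
            if (!e.getKey().equals("idle")) {
                busy += delta; // user, system, iowait, ... all count as busy
            }
        }
        return total == 0 ? 0.0 : busy / total;
    }

    public static void main(String[] args) {
        // Illustrative counter values in seconds, summed over 8 cores, taken 10 s apart.
        Map<String, Double> before = Map.of("idle", 5000.0, "user", 3000.0, "system", 400.0, "iowait", 20.0);
        Map<String, Double> after = Map.of("idle", 5008.0, "user", 3062.0, "system", 409.0, "iowait", 21.0);
        System.out.printf("busy: %.0f%%%n", 100 * busyFraction(before, after));
    }
}
```

On 8 cores, a busy fraction of 0.9 corresponds to roughly 7.2 cores' worth of work.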

View Loading Time

Average view loading time is calculated by dividing the rate of the jmeter_rt_summary_sum metric by the rate of jmeter_rt_summary_count for each request involved in loading a particular view, for example:

```promql
rate(jmeter_rt_summary_sum{label="LoadOrdersListView-34"}[10s]) / rate(jmeter_rt_summary_count{label="LoadOrdersListView-34"}[10s])
```

The per-request results are then summed using a Grafana expression.

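A median-based estimate can be obtained by selecting the 0.5 quantile of each request and summing the results. Strictly speaking, the sum of per-request medians is only an approximation of the median view load time:

```promql
sum(jmeter_rt_summary{label=~"LoadOrdersListView-34|OrderCreation-37|OrderCreation-38", quantile="0.5"})
```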
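Because both rates in the average-time query are taken over the same 10-second window, their ratio is simply the increase in the cumulative response-time sum divided by the increase in the request count, that is, the mean response time of the requests completed in that window. The sketch below reproduces that arithmetic from two snapshots of the counters and then sums the per-request means into a view load time. It ignores counter resets and Prometheus's extrapolation at window edges; the types are hypothetical, the values in main are illustrative rather than measured, and it assumes the listener reports response times in milliseconds.

```java
/** Hypothetical helper reproducing rate(sum[w]) / rate(count[w]) from two counter snapshots. */
final class WindowedAverage {

    /** Cumulative counters for one request label at one instant. */
    record Snapshot(double sumMs, long count) {}

    /** Mean response time (ms) of the requests completed between two snapshots. */
    static double meanMs(Snapshot earlier, Snapshot later) {
        long completed = later.count() - earlier.count();
        // No requests finished in the window: PromQL would also yield NaN (0/0).
        return completed == 0 ? Double.NaN : (later.sumMs() - earlier.sumMs()) / completed;
    }

    /** View load time: per-request means summed, as done with the Grafana expression. */
    static double viewLoadTimeMs(Snapshot[] earlier, Snapshot[] later) {
        double total = 0;
        for (int i = 0; i < earlier.length; i++) {
            total += meanMs(earlier[i], later[i]);
        }
        return total;
    }

    public static void main(String[] args) {
        // Illustrative counters (not measurements) for the three Order List View requests, 10 s apart.
        Snapshot[] t0 = {new Snapshot(90_000, 60), new Snapshot(24_000, 60), new Snapshot(12_000, 60)};
        Snapshot[] t1 = {new Snapshot(102_000, 70), new Snapshot(27_000, 70), new Snapshot(14_000, 70)};
        System.out.printf("list view load time: %.0f ms%n", viewLoadTimeMs(t0, t1));
    }
}
```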

For the Order List View load time looks as follows:

There is an initial increase in load at the beginning of the test, which later stabilizes after a few minutes. Therefore, we will exclude the first 10 minutes from the analysis and focus on the average resource consumption during one of the subsequent periods when the value remains relatively constant. This typically occurs around the 40-minute mark of the load test.

For OrderListView the average load time is 2.8s (for 1000 users and 5GB of heap size).

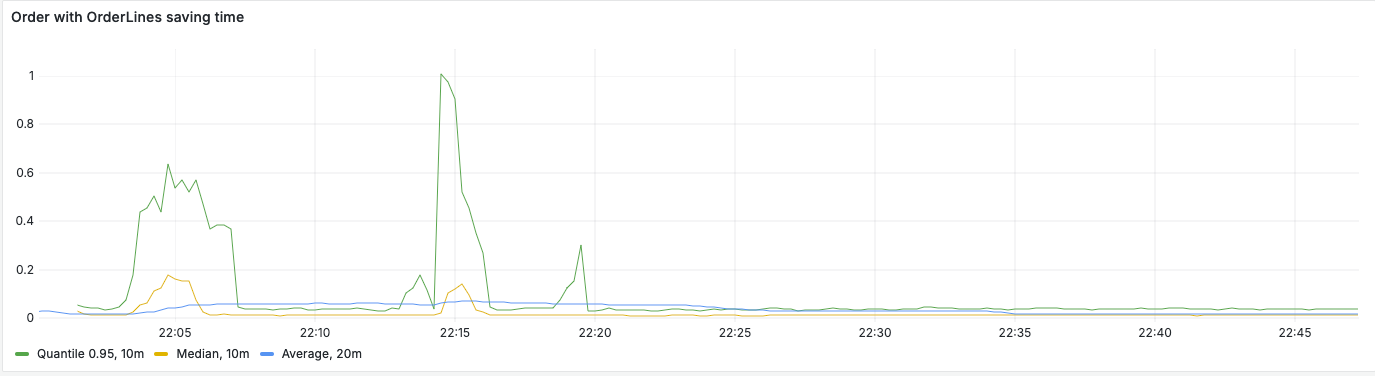
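Excluding the warm-up and averaging over a stable period amounts to filtering a time series by timestamp before taking the mean. The sketch below shows that step; the sample type and class name are hypothetical, the window bounds are parameters chosen by looking at the graph (for example, a window around the 40-minute mark), and the values in main are illustrative.

```java
import java.util.List;

/** Hypothetical helper: averages a metric over a chosen steady-state window. */
final class SteadyState {

    /** One point of a time series: minutes since the test started and the observed value. */
    record Sample(double minute, double value) {}

    /** Mean of the samples with fromMinute <= minute < toMinute, or NaN if the window is empty. */
    static double windowMean(List<Sample> samples, double fromMinute, double toMinute) {
        return samples.stream()
                .filter(s -> s.minute() >= fromMinute && s.minute() < toMinute)
                .mapToDouble(Sample::value)
                .average()
                .orElse(Double.NaN);
    }

    public static void main(String[] args) {
        // Illustrative values (not measurements): high during warm-up, then flat.
        List<Sample> series = List.of(
                new Sample(2, 9.0), new Sample(6, 7.0), new Sample(12, 5.0),
                new Sample(38, 4.2), new Sample(40, 3.8), new Sample(42, 4.0));
        double withWarmUp = windowMean(series, 0, Double.POSITIVE_INFINITY);
        double steadyState = windowMean(series, 35, 45); // bounds chosen by eye from the graph
        System.out.printf("all samples: %.2f, steady state: %.2f%n", withWarmUp, steadyState);
    }
}
```

Including the warm-up samples would inflate the average, which is why the first minutes are dropped before reading off the values.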

Entity Saving Time

We can observe the Order Details DataContext saving time using the jmix_bookstore_order_save_time_seconds metric.

The average entity saving time (0.015 s) is small compared to the view loading time.

Results

We ran the tests with heap sizes of 2, 5, 10, and 14 GB. In each case, 1000 users followed the scenario described above with the following parameters:

- loginWait = 10s

- logoutWaitAvg = 30s

- logoutWaitDev = 10s

- clickPeriodAvg = 2s

- clickPeriodDev = 1s

- rampUpPeriod = 230s

The results of measurements are shown in the table below.

| Allocated Heap Memory (GB) | Average Used Heap Memory, GB (% of allocated) | List View Average Load Time (s) | Detail View Average Load Time (s) | Save Order and Return to List View Average Time (s) | Order Saving Average Time (s) | CPU Usage (%) |
|---|---|---|---|---|---|---|
| 2 | 1.74 (87%) | 7.2 | 3.6 | 1.5 | 0.300 | 94 |
| 5 | 3.4 (68%) | 2.8 | 2.5 | 1.5 | 0.015 | 90 |
| 10 | 5.6 (56%) | 2.3 | 2.1 | 1.3 | 0.014 | 91 |
| 14 | 6.6 (47%) | 2.2 | 1.9 | 1.2 | 0.017 | 89 |
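The utilization percentages in the table, and the shrinking gains as the heap grows, follow from simple arithmetic on the table values. The sketch below recomputes them; the class name is hypothetical, and the inputs are copied from the table.

```java
/** Hypothetical helper recomputing derived figures from the results table. */
final class ResultsMath {
    static final double[] ALLOCATED_GB = {2, 5, 10, 14};
    static final double[] USED_GB = {1.74, 3.4, 5.6, 6.6};
    static final double[] LIST_VIEW_S = {7.2, 2.8, 2.3, 2.2};

    /** Used heap as a whole-number percentage of the allocated heap. */
    static long utilizationPercent(double usedGb, double allocatedGb) {
        return Math.round(100 * usedGb / allocatedGb);
    }

    /** Relative reduction from one load time to the next, as a whole-number percentage. */
    static long reductionPercent(double before, double after) {
        return Math.round(100 * (before - after) / before);
    }

    public static void main(String[] args) {
        for (int i = 0; i < ALLOCATED_GB.length; i++) {
            System.out.printf("%.0f GB heap: %d%% used%n",
                    ALLOCATED_GB[i], utilizationPercent(USED_GB[i], ALLOCATED_GB[i]));
        }
        for (int i = 1; i < LIST_VIEW_S.length; i++) {
            System.out.printf("%.0f -> %.0f GB: list view load time -%d%%%n",
                    ALLOCATED_GB[i - 1], ALLOCATED_GB[i],
                    reductionPercent(LIST_VIEW_S[i - 1], LIST_VIEW_S[i]));
        }
    }
}
```

For the list view, moving from 2 to 5 GB cuts the average load time by about 61%, from 5 to 10 GB by about 18%, and from 10 to 14 GB by about 4%.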

Limitations

Compared to a real usage of the application, the performance tests have the following limitations:

- All users log in with the same credentials.

- All users repeat the same scenario.

- Image loading is disabled to simplify the HTTP requests used in the scenario.

- Task notification timer is disabled for simplicity.

Putting Results into Perspective

Within these limitations, with 5 GB of heap or more all measured view load times stay below 3 seconds, which aligns with findings from a Nielsen Norman Group paper suggesting that response times under 3 seconds are generally acceptable for user satisfaction. In practice, the less time users wait to interact with the system, the better the experience. However, each team has to decide what response time is acceptable based on its own performance-related non-functional requirements (NFRs).

The results show that increasing the heap from 2 GB to 5 GB noticeably improves performance, cutting list and detail view load times (for example, from 7.2 s to 2.8 s for the Order List View). Beyond 5 GB, the gains are much smaller. Keep in mind that factors such as network latency and server load can add around a second to response times, so trying to optimize load times below 1.5 to 1.7 seconds may not be worth the extra investment.

With that said, we don’t recommend using less than 2GB of RAM if you’re building a medium-sized application with 1000 expected users. For most applications with similar configurations, 5GB hits the sweet spot between performance and resource use.

Conclusion

These tests provide clear insights into how the application scales with different memory configurations. While results may vary depending on your hardware and workload, they highlight optimal setups for smooth performance. Jmix may not be the best choice for applications with massive user traffic like Amazon or Facebook, but it excels as a quick and flexible solution for internal tools or smaller-scale applications like custom CRMs, admin panels, and other business tools.